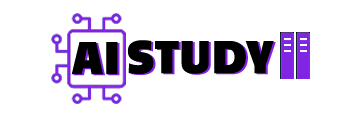

I made one simple workflow that does a proper swap. Pick your source video. Pick one image of your subject. Mark the object to replace. Run. Faces stay stable, the motion looks natural.

What this workflow does

- Swaps one object or person in the video with your subject image

- Keeps the rest of the scene as it is

- Uses point-based masking, a 3-sampler chain, and gentle LoRA weights

- Works with FP8 and Q5 GGUF builds on low VRAM

The quick demo

Scene

A park. An alien is eating a burger. A bodyguard is there. An older man sits with a newspaper. He smiles and looks at the alien.

Goal

Replace the alien with a woman from my image.

Result

The alien is replaced by the woman. Motion matches. Timing stays the same. Face stays the same across frames. In one try, the legs had mixed footwear. I added one small line in the prompt and ran again. Fixed.

Workflow Groups

Video section

- Upload your clip.

- In Resolution Master, pick a supported size. Example 832 x 480.

- If your clip is vertical, click Swap to make it 480 x 832. Good for low VRAM.

Image section

- Upload your subject image.

- Match the same resolution you used for the video.

- Use Image Background Remover so the subject blends well.

Masking

- Green points on the target you will change.

- Red points on the parts you will not touch.

- Test with only the mask visible once. Then enable the rest.

Model loaders

- Two loaders are present: one High-Noise and one Low-Noise.

- You can choose FP8 or Q5 GGUF builds here.

- If you pick GGUF, keep any extra quant switch inside the node off.

- There is a VACE Model Select node. Pick one High-Noise file and one Low-Noise file in that node.

LoRA section

- Load the Lightx 4-step LoRAs you already use.

- Optional: add HPS v2 LoRA for human preference tuning. Start with small weights.

Text and VAE

- Use the matching text encoder and VAE for your build.

- Write your positive prompt in the text encoder node.

Sampling

- Keep it simple.

- 1 step base without LoRA.

- Then 3 + 3 with LoRA.

- Very low VRAM? Bypass the 1-step base. If you can keep it, it improves motion stability.

Color match

- Turn on color match so the inserted subject fits the plate.

- If tones look off, set strength to 0.

Upscale

- Optional block at the end. Pick an upscaler and run for a sharper final.

Files you need

Put these in the right ComfyUI folders so the nodes can find them fast. If a node still cannot see a file, restart ComfyUI and check the loader path once.

Base models

Text to Video (T2V)

- GGUF builds: QuantStack Wan2.2 T2V A14B GGUF. Folder:

ComfyUI/models/. Hugging Facediffusion_models - FP8 builds: Kijai WanVideo fp8 scaled (T2V). Folder:

ComfyUI/models/diffusion_models(use Load Diffusion Model or the WanVideo loader). Hugging Face

VACE (video editing and masked swap)

- GGUF builds: QuantStack Wan2.2 VACE Fun A14B GGUF. Folder:

ComfyUI/models/. Hugging Facediffusion_models - FP8 builds: Kijai WanVideo fp8 scaled (VACE). Folder:

ComfyUI/models/diffusion_models. Hugging Face

LoRAs

- Lightx 4-step LoRAs for T2V. Folder:

ComfyUI/models/loras. Hugging Face - Wan2.2 Fun Reward LoRAs from Alibaba PAI (optional quality tuning). Folder:

ComfyUI/models/loras. Hugging Face

Compare Wan 2.2 FP8 vs Q5 GGUF test

Now I switched to Q5 GGUF with the same mask and same resolution.

Timing and VRAM usage

On my PC the first pass, High Noise without LoRA, wraps up in around 31 seconds and take roughly 23 to 24 GB of VRAM. After that the High Noise pass with LoRA at three steps takes close to 38 seconds and sits near 12 to 13 GB. The final Low Noise pass with LoRA at three steps is about 51 seconds. Together, for 97 frames, the render lands around 1 minute 32 seconds start to finish.

Output notes

- Q5 Result Looks good similar to fp8.

- If you want extra detail, turn on the upscale group. My 97-frame upscale took around 48 minutes. The full frame gets sharper. Eyes may still look soft if the source eyes are soft.

Second example with a T-shirt design

New video. A woman walks on the street.

New subject image. Another woman with a T-shirt that says “excuse me”.

Goal

Swap the subject and keep the same T-shirt design.

Steps

- Mask cleanly. Green circles on the subject. Red on the background.

- Run the model groups first. Keep upscale off for the first pass.

Result

The swap is clean. The T-shirt text is close but not perfect.

I add a small line in the prompt about shoes. I remove a side cape I do not want.

Run again. Now “excuse me” is readable. Shoes are correct.

The graphic is about 98 percent same. To reach 100 percent, try one or two tiny prompt tweaks and re-run.

FAQ

Do I need High-Noise and Low-Noise models

Yes. The early steps need High-Noise. The fine detail needs Low-Noise. That mix keeps motion clean and stable.

What sampler steps should I keep

Use 1 step without LoRA for the base. Then 3 and 3 with LoRA. This is fast and stable on low VRAM.

When should I use color match

Keep it on by default. If skin or clothes look strange, set it to zero and try again.

Hi, unfortunately i got a loop error? :”Loop Detected 296,265,” , can you tell me what that is and to solve it?

Hello Esha,

I am using your thankful json file. Only issue is the following error.

May I ask you to review this for me?

Sampling 101 frames at 480×832 with 1 steps

0%| | 0/1 [00:00<?, ?it/s]Error during model prediction: 'WanModel' object has no attribute 'vace_patch_embedding'

0%| | 0/1 [00:13<?, ?it/s]

Error during sampling: 'WanModel' object has no attribute 'vace_patch_embedding'

!!! Exception during processing !!! 'WanModel' object has no attribute 'vace_patch_embedding'

Traceback (most recent call last):

File "C:\Users\RAT\AppData\Local\Programs\@comfyorgcomfyui-electron\resources\ComfyUI\execution.py", line 496, in execute

output_data, output_ui, has_subgraph, has_pending_tasks = await get_output_data(prompt_id, unique_id, obj, input_data_all, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb, hidden_inputs=hidden_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\AppData\Local\Programs\@comfyorgcomfyui-electron\resources\ComfyUI\execution.py", line 315, in get_output_data

return_values = await _async_map_node_over_list(prompt_id, unique_id, obj, input_data_all, obj.FUNCTION, allow_interrupt=True, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb, hidden_inputs=hidden_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\custom_nodes\comfyui-lora-manager\py\metadata_collector\metadata_hook.py", line 165, in async_map_node_over_list_with_metadata

results = await original_map_node_over_list(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\AppData\Local\Programs\@comfyorgcomfyui-electron\resources\ComfyUI\execution.py", line 289, in _async_map_node_over_list

await process_inputs(input_dict, i)

File "C:\Users\RAT\AppData\Local\Programs\@comfyorgcomfyui-electron\resources\ComfyUI\execution.py", line 277, in process_inputs

result = f(**inputs)

^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\nodes.py", line 4074, in process

raise e

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\nodes.py", line 3970, in process

noise_pred, self.cache_state = predict_with_cfg(

^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\nodes.py", line 2912, in predict_with_cfg

raise e

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\nodes.py", line 2813, in predict_with_cfg

noise_pred_cond, cache_state_cond = transformer(

^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\.venv\Lib\site-packages\torch\nn\modules\module.py", line 1773, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\.venv\Lib\site-packages\torch\nn\modules\module.py", line 1784, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\wanvideo\modules\model.py", line 2424, in forward

vace_hints = self.forward_vace(x, data["context"], data["seq_len"], kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\wanvideo\modules\model.py", line 1733, in forward_vace

c = [self.vace_patch_embedding(u.unsqueeze(0).float()).to(x.dtype) for u in vace_context]

^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\RAT\code\ComfyUI\.venv\Lib\site-packages\torch\nn\modules\module.py", line 1962, in __getattr__

raise AttributeError(

AttributeError: 'WanModel' object has no attribute 'vace_patch_embedding'. Did you mean: 'patch_embedding'?

Prompt executed in 144.10 seconds