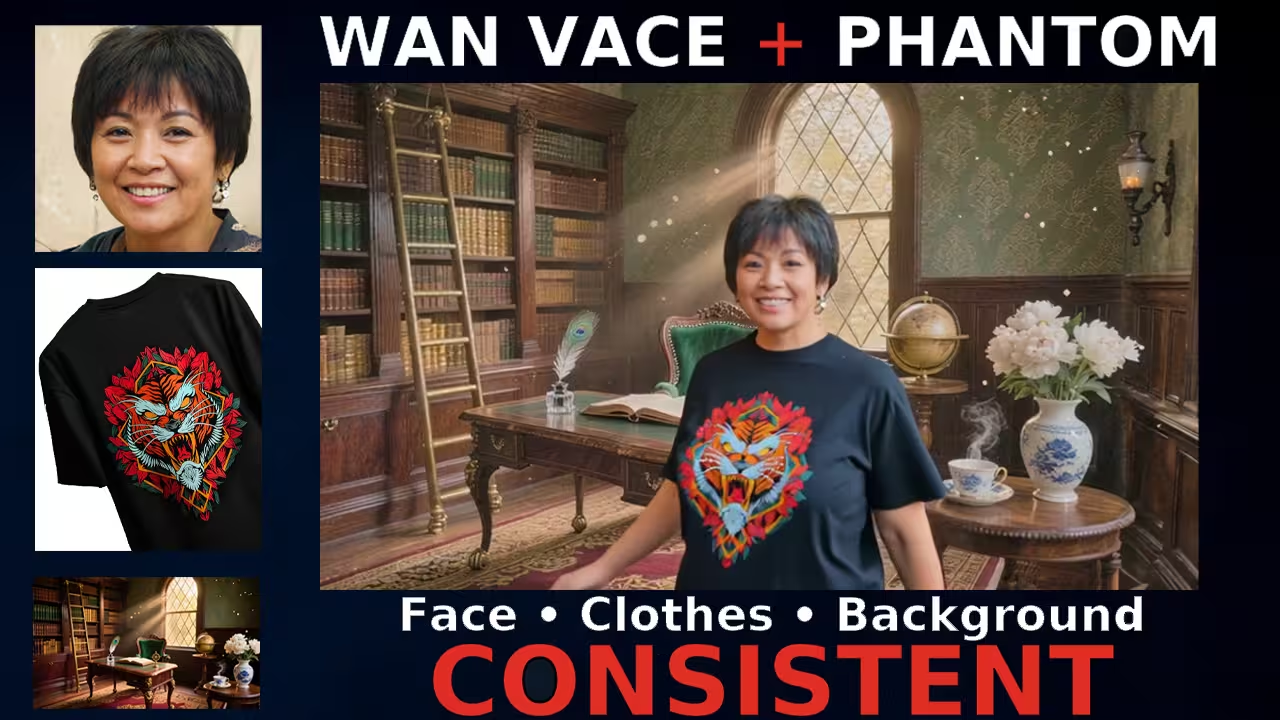

I’ve been working on the new Wan Vace Advanced Workflow inside ComfyUI, and this one feels like a major upgrade. It mixes the Wan 2.1 Vace model with Phantom V2 to produce realistic motion without the usual flicker or face drift.

If you’ve ever tried to make a short video from three images and noticed the face or clothes changing between frames, this workflow is built to fix that. In my tests, once the models were connected the right way, every frame looked like part of one continuous shot.

Setting Up the Workflow

I started by loading the Wan Vace Advanced Workflow file into ComfyUI.

After opening it, I saw a few red nodes on my first run. That usually means some custom nodes are missing.

I went to the Manager on the top bar, clicked Install Missing Custom Nodes, and waited for the downloads. Once they were installed, I restarted ComfyUI. When it came back up, the red nodes were gone and the workflow was ready.

This version adds new video nodes made especially for Wan’s video models. The extension came from drozbay on GitHub, and it improves how the Wan 2.1 video models run inside ComfyUI.

How Vace and Phantom Work Together

In this setup, two models work side by side.

The Wan Vace model handles the camera motion, lighting, and full-frame realism.

The Phantom model keeps the face and outfit consistent so they don’t change between frames.

Without Phantom, you often see small drifts or changes in the face and clothing. Together, the two models give a natural look, with a consistent face, clothing, and background.

Two Ways to Add Motion

This workflow gives you two motion options.

- First method: Spline Editor. Here you can draw your camera path directly. I drew a short arc path and connected it to Wan Vace Phantom Sample V2.

- Second method: Motion Capture Video. You can upload any short video and extract the skeleton motion, which also connects to Wan Vace Phantom Sample V2.
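To make the idea of a "short arc path" concrete, here is a minimal Python sketch that samples an arc into one point per frame. It is only an illustration: the quadratic Bézier curve, the function names, and the control points are my own assumptions, not how the Spline Editor node works internally. The 97 frames match the frame count used later in this post.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def quadratic_bezier(p0: Point, p1: Point, p2: Point, t: float) -> Point:
    """Evaluate a quadratic Bezier curve at t in [0, 1]."""
    u = 1.0 - t
    x = u * u * p0[0] + 2 * u * t * p1[0] + t * t * p2[0]
    y = u * u * p0[1] + 2 * u * t * p1[1] + t * t * p2[1]
    return (x, y)


def sample_arc(p0: Point, p1: Point, p2: Point, frame_count: int) -> List[Point]:
    """Return one (x, y) point per frame, evenly spaced in t."""
    if frame_count < 2:
        raise ValueError("need at least two frames to describe a path")
    return [
        quadratic_bezier(p0, p1, p2, i / (frame_count - 1))
        for i in range(frame_count)
    ]


# Hypothetical control points inside an 832 x 480 frame:
# start on the left, bend upward in the middle, end on the right.
path = sample_arc((100, 300), (416, 100), (732, 300), frame_count=97)
print(path[0], path[48], path[-1])
```

The middle control point sets how strongly the path bends, so moving it closer to the line between the two end points gives a flatter arc.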

Connecting the Three Images

For this test, I used three images.

One for the face, one for clothing, and one as the background reference.

I connected the Has Background image to Wan Vace Phantom Sample V2, which keeps the environment stable — walls, furniture, and light direction all stay matched.

Then I loaded the face image and clothing image for Phantom, and the background image for Wan Vace. This keeps the subject and surroundings consistent during the whole sequence.

Before the First Run

First I selected the Spline Editor and drew a small motion path. Then I set:

- Resolution 832 × 480

- Frame Count 97

- FPS 24

If your GPU has less memory, you can safely lower it to 81 frames and 16 FPS. It still looks smooth while using less VRAM.
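A quick bit of arithmetic shows what these two settings mean in practice. The sketch below is plain Python with helper names I made up. The 4n + 1 check reflects a pattern that Wan frame counts commonly follow and that both values here happen to fit, but treat that rule as my assumption rather than something this workflow enforces.

```python
def clip_seconds(frame_count: int, fps: int) -> float:
    """Playback length in seconds."""
    return frame_count / fps


def is_4n_plus_1(frame_count: int) -> bool:
    """True if frame_count has the form 4n + 1 (assumed convention)."""
    return frame_count >= 1 and (frame_count - 1) % 4 == 0


def pixel_frames(width: int, height: int, frame_count: int) -> int:
    """Total pixels across all frames, a rough proxy for workload."""
    return width * height * frame_count


full = pixel_frames(832, 480, 97)
reduced = pixel_frames(832, 480, 81)

print(f"97 frames @ 24 FPS: {clip_seconds(97, 24):.2f} s")
print(f"81 frames @ 16 FPS: {clip_seconds(81, 16):.2f} s")
print(f"reduced / full workload: {reduced / full:.2f}")
print(is_4n_plus_1(97), is_4n_plus_1(81))
```

Note that the saving comes from the lower frame count. The FPS value only changes playback speed, so the 81-frame clip at 16 FPS actually plays slightly longer than the 97-frame clip at 24 FPS.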

Files You Need to Download

Before running, make sure you’ve placed the model files correctly:

- Base Models → ComfyUI/models/diffusion_models

- LoRA Files → ComfyUI/models/loras
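If you want to double-check the placement before launching, a small script can list what is actually sitting in those folders. This is a rough sketch under a few assumptions: the `ComfyUI` root path, the file extensions, and the function names are mine, and the LoRA folder is looked up under both `loras` and `lora` because installs and guides differ on the name.

```python
from pathlib import Path

# Assumed set of model file extensions; extend it if your files differ.
MODEL_EXTENSIONS = {".safetensors", ".gguf", ".pt", ".ckpt"}


def find_model_files(folder: Path) -> list[str]:
    """Return sorted model file names in folder, or [] if it is missing."""
    if not folder.is_dir():
        return []
    return sorted(
        p.name
        for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in MODEL_EXTENSIONS
    )


def report(comfy_root: Path) -> dict[str, list[str]]:
    """Map each model category to the files found for it."""
    models = comfy_root / "models"
    # Use whichever LoRA folder name exists; fall back to "loras".
    lora_dir = next(
        (models / name for name in ("loras", "lora") if (models / name).is_dir()),
        models / "loras",
    )
    folders = {
        "base models": models / "diffusion_models",
        "LoRA files": lora_dir,
    }
    return {label: find_model_files(path) for label, path in folders.items()}


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point it at your own install folder.
    for label, files in report(Path("ComfyUI")).items():
        print(f"{label}: {files if files else 'nothing found'}")
```

An empty list for either category usually means the file went into the wrong folder or the download did not finish.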

For this project, I used two LoRAs:

- CausVid: allows lower step counts (around 8 to 12 steps with CFG 2.0 to 3.0)

- T2V Extract: reduces noise and keeps skin smooth.

I used 12 steps and CFG 2.5 for both. If your GPU is weaker, bring CFG down to 2 or steps to 8 for faster generation.

For the scheduler, Beta 57 worked best for me, but the default Beta scheduler also runs fine.
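To keep these numbers in one place, here is a small Python sketch of the two setups as presets. The class, the preset names, and the scheduler label strings are hypothetical and only for illustration; they are not identifiers from ComfyUI. The faster preset lowers both steps and CFG, although lowering just one of them is also an option.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SamplerSettings:
    steps: int
    cfg: float
    scheduler: str = "beta57"  # placeholder label, not a verified node value

    def in_low_step_range(self) -> bool:
        """Check against the 8 to 12 steps, CFG 2.0 to 3.0 range noted above."""
        return 8 <= self.steps <= 12 and 2.0 <= self.cfg <= 3.0


def relative_steps(a: SamplerSettings, b: SamplerSettings) -> float:
    """Step count of a as a fraction of b (a rough guide to sampling time)."""
    return a.steps / b.steps


QUALITY = SamplerSettings(steps=12, cfg=2.5)  # the values used in this post
FASTER = SamplerSettings(steps=8, cfg=2.0)    # for weaker GPUs

for name, preset in (("quality", QUALITY), ("faster", FASTER)):
    print(name, preset, "ok" if preset.in_low_step_range() else "out of range")

print(f"faster preset runs {relative_steps(FASTER, QUALITY):.0%} of the steps")
```

Sampling time grows roughly with the number of steps, so the faster preset should take about two thirds as long, though the exact gain depends on your hardware.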

First Example Test

For my first try, I used only the Spline Editor and disabled the motion capture group.

I uploaded a woman’s face image, a dress image, and my library background.

My prompt:

A woman in her 50s with short dark hair wearing a pink dress stands beside a desk in a Victorian-era library at golden hour. She rests her hand on the desk, looks up, extends her hand to shake, and smiles at the viewer.

When I clicked Run, the result honestly surprised me.

The face matched my face reference image. The dress was the same, and even the details matched. The background looked more realistic than the reference image.

The lighting was natural. The sunlight came through the window just right, and the reflections on the desk looked real.

Testing Motion Capture

Next, I disabled the Spline Editor and enabled the Control Motion Group.

I uploaded a short clip of a boy walking toward the camera to capture the motion.

When I ran it, my subject copied that walk exactly. The face stayed stable, the clothes didn’t shift, and the steps looked smooth.

This showed me that both motion types — manual and captured — blend beautifully with the Vace and Phantom pair.
