Works with style references. Simple setup. Clear outputs.

Contents

QwenEdit InStyle basics in plain words

How to prompt (what to type)

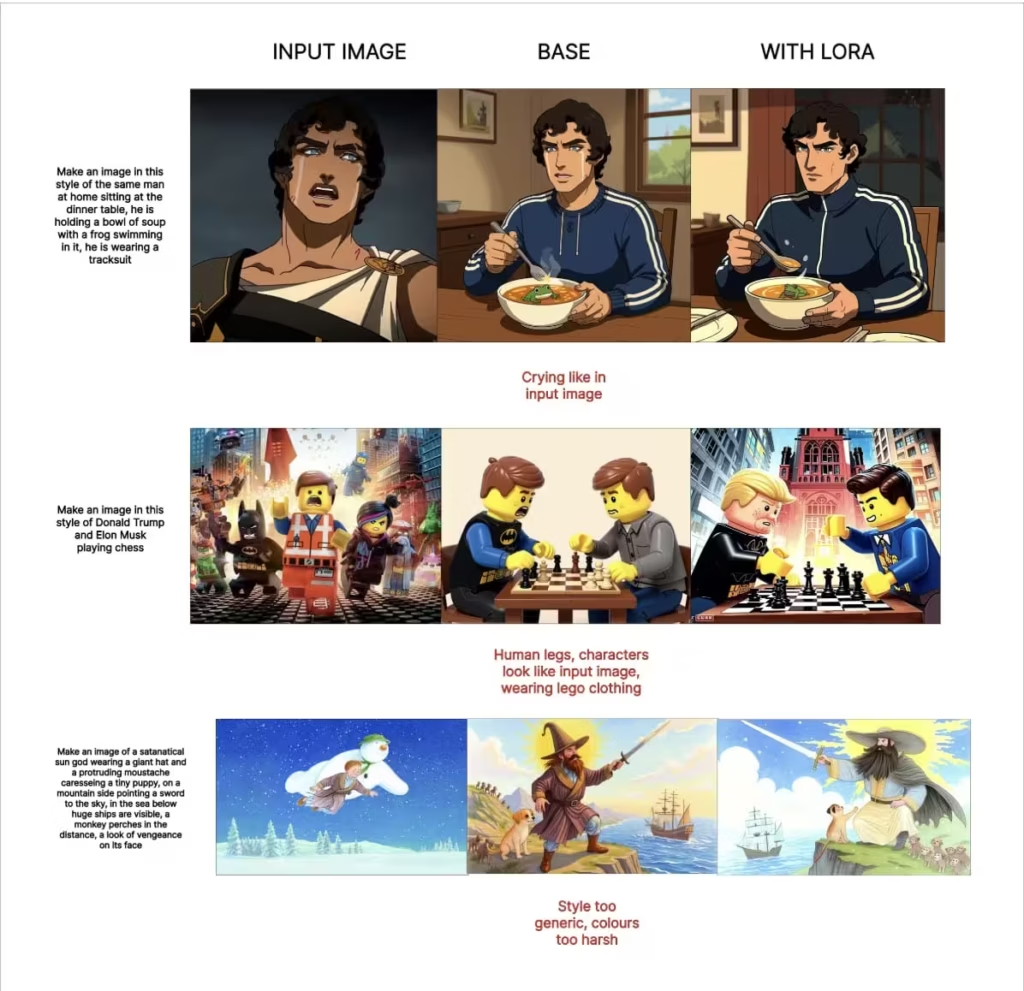

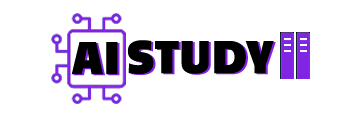

What it’s good at (and where it slips)

Strengths

Can struggle

Training set (what it used)

Dataset: https://huggingface.co/datasets/peteromallet/high-quality-midjouney-srefs
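To browse the training images yourself, something like the following may work. It is a minimal sketch, assuming the Hugging Face `datasets` library is installed and that the dataset has a `train` split (the split name is a guess and is not confirmed here). The helper names `repo_id_from_url` and `load_style_refs` are made up for illustration.

```python
from urllib.parse import urlparse

# Dataset URL as given above (spelling kept exactly as published).
DATASET_URL = "https://huggingface.co/datasets/peteromallet/high-quality-midjouney-srefs"


def repo_id_from_url(url: str) -> str:
    """Turn a Hugging Face dataset page URL into an 'owner/name' repo id."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 3 or parts[0] != "datasets":
        raise ValueError(f"not a Hugging Face dataset URL: {url}")
    return "/".join(parts[1:3])


def load_style_refs(split: str = "train"):
    """Download one split of the dataset (hypothetical helper).

    The default split name "train" is an assumption; check the dataset
    page for the splits that actually exist.
    """
    # Imported lazily so the URL helper above works without `datasets` installed.
    from datasets import load_dataset

    return load_dataset(repo_id_from_url(DATASET_URL), split=split)
```

Calling `load_style_refs()` needs network access and downloads the data. Column names are not listed here, so inspect the returned object (for example its `features`) before relying on any particular field.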

Thanks a lot, you mean a great deal to me.