A new LoRA pack called Pusa Wan2.2 V1 is now live on Hugging Face. It adds a high-noise and a low-noise adapter to the Wan 2.2 T2V A14B model so creators can do text to video, image to video, start-end frame fills and video extension with just 4 inference steps using LightX2V.

Why this matters

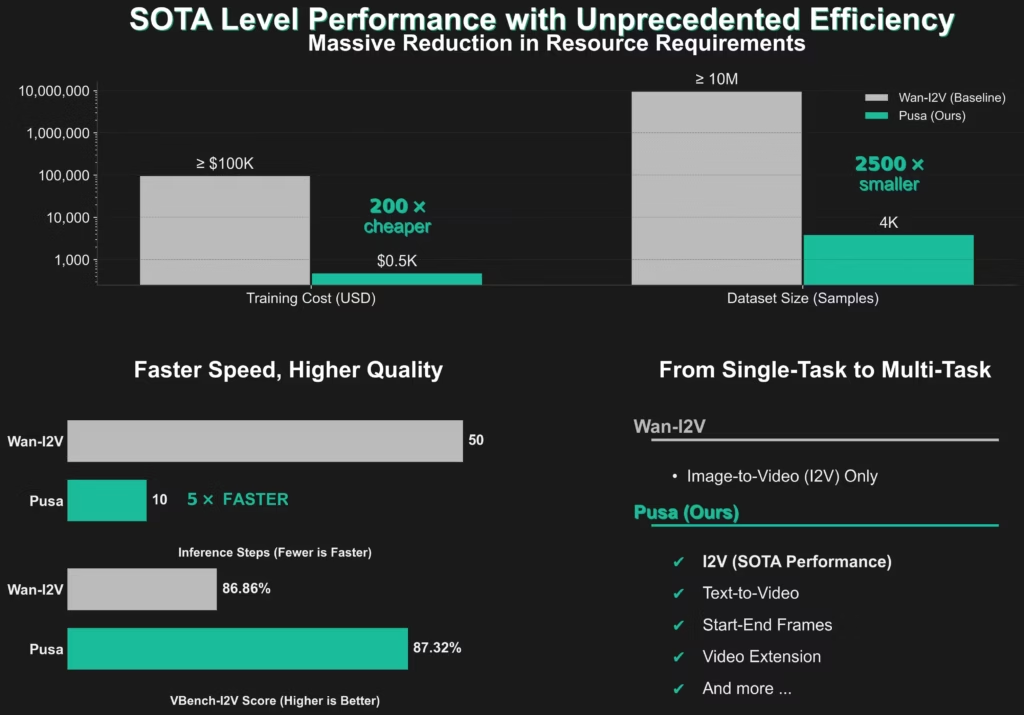

The LoRA uses a method called Vectorized Timestep Adaptation. This method improves control over motion and timing yet keeps the base model’s quality. The authors of the PUSA V1 paper claim they reach strong results with very low training cost by leaning on this technique.

What is inside

Pusa Wan2.2 V1 targets the Wan2.2 T2V A14B base which uses a Mixture-of-Experts DiT backbone. The model card lists separate adapters for high-noise and low-noise phases and shows example settings for common tasks. The page also highlights compatibility with LightX2V for 4-step runs.

How to use it quickly

Download the Wan 2.2 A14B base then fetch the two Pusa LoRA weights. In the sample commands provided, the authors set num_inference_steps to 4, cfg_scale to 1 when using LightX2V, and recommend high_lora_alpha around 1.5 and low_lora_alpha around 1.4. The README shows complete command lines for image to video, start-end frames and text to video.

Paste this into your article

Download Pusa Wan2.2 V1 LoRA

Base model required Wan-AI Wan2.2-T2V-A14B.

- Direct download high_noise_pusa.safetensors 4.91 GB https://huggingface.co/RaphaelLiu/Pusa-Wan2.2-V1/resolve/main/high_noise_pusa.safetensors Hugging Face

- Direct download low_noise_pusa.safetensors 4.91 GB

https://huggingface.co/RaphaelLiu/Pusa-Wan2.2-V1/resolve/main/low_noise_pusa.safetensors Hugging Face

License Apache 2.0 on the model page. Hugging Face

Thank you, Esha, for this excellent presentation. Once again, it is comprehensive and detailed. Quick question: do you have an example workflow to suggest? Thank you.

Is it strictly for T2V or does it work for I2V as well?

yes it will work

Can you pleas add the Workflow?