I didn’t expect this update to drop so fast — but Qwen-Image just got native support inside ComfyUI, and it’s working better than I thought.

If you’ve been holding out for something that can render long paragraphs of text inside an image — not just English, but full multilingual layouts — this is the one. The Qwen team dropped their 20B MMDiT model a while back, and now you can run it locally, straight through Comfy. No hacks. No duct tape.

Just download, load the model, and it’s ready.

What Qwen-Image Actually Does

At a high level, Qwen-Image is focused on one thing: high-fidelity text rendering inside images. Like, full-on structured blocks of Chinese and English text that actually hold up when you zoom in.

I tried a few of the sample prompts from the release, and it’s not just poster-quality stuff. It can do layout-driven outputs — things like magazine covers, billboard mockups, and UI-style screenshots with mixed fonts and multi-language labels. And it handles all of that without mangling characters or spacing.

You can grab both versions (fp8 and bf16) on Hugging Face.

The bf16 version worked fine for me on a 32GB card, but more on that below.

What About VRAM?

I tested qwen_image_fp8 on a 4090 with 24GB VRAM. First run took about 94 seconds, second run dropped to 71. It used around 86% of available memory. If you go with the heavier bf16 version, expect 96% usage and a longer wait — closer to 5 minutes for first-time generation.
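A quick back-of-the-envelope check helps explain those numbers. This sketch assumes roughly 20 billion parameters (the “20B” figure above), counts only the diffusion model’s weights (no text encoder, VAE, or activations), and treats 1 GB as 10^9 bytes. At fp8 (1 byte per parameter) the weights alone come to about 20 GB, in the same range as the ~86% of a 24GB card (about 20.6 GB) reported above. At bf16 (2 bytes per parameter) they come to about 40 GB, which can’t fit on that card at all, so ComfyUI presumably has to keep part of the model outside VRAM, which would be consistent with the much slower first run.

```python
# Back-of-the-envelope weight size for the Qwen-Image diffusion model.
# Assumptions: ~20 billion parameters; weights only (no text encoder, VAE,
# or activations); 1 GB = 10**9 bytes.
PARAMS = 20e9

BYTES_PER_PARAM = {
    "fp8": 1,   # 8-bit floating point
    "bf16": 2,  # 16-bit bfloat
}


def weight_gb(precision: str, params: float = PARAMS) -> float:
    """Approximate size of the weights alone, in GB."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_vram(precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weight_gb(precision) <= vram_gb


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_gb(precision)
        print(f"{precision}: ~{size:.0f} GB of weights, "
              f"fits in 24 GB: {fits_in_vram(precision, 24)}")
```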

That said, people in the community got it working on 16GB cards. One user said it ran fine, just not super fast, with speeds comparable to WAN 2.2 T2I. If you’re getting black images or weird results, it’s probably a node mismatch or an older ComfyUI version. Make sure you’re running the latest Nightly and that your custom nodes are updated too.

There’s more detail on GGUF conversions and dual-GPU setups in the Reddit thread.

A Few Quirks to Watch For

It’s not totally flawless though. A few people reported black images or weird loading errors — especially if you’re running an older ComfyUI build or forgot to update certain nodes.

There’s one common issue where you get a CLIPLoader error saying something like:

“type: ‘qwen_image’ not in list…”

That just means your Comfy version doesn’t recognize the model type. Run the update_comfyui.bat script directly instead of only updating through the Manager. You might also need to update your GGUF nodes if you’re running the quantized versions.
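If you’d rather check before loading a workflow, a running ComfyUI server describes its nodes at the /object_info endpoint, and the CLIPLoader entry lists the type values it accepts. The sketch below assumes the default address http://127.0.0.1:8188 and that the combo options show up either as a plain list or under a “COMBO” entry with an “options” key; the exact JSON shape can vary between ComfyUI versions, so treat it as a starting point rather than a guaranteed check.

```python
import json
import urllib.request


def clip_loader_types(object_info: dict) -> list:
    """Return the options of CLIPLoader's 'type' input from /object_info JSON."""
    spec = object_info["CLIPLoader"]["input"]["required"]["type"]
    first = spec[0]
    # Classic format: [["stable_diffusion", "sd3", ...], {...optional extras}]
    if isinstance(first, list):
        return first
    # Assumed newer format: ["COMBO", {"options": [...]}]
    if first == "COMBO" and len(spec) > 1 and isinstance(spec[1], dict):
        return list(spec[1].get("options", []))
    return []


def supports_qwen_image(object_info: dict) -> bool:
    """True if this ComfyUI build lists 'qwen_image' as a CLIPLoader type."""
    return "qwen_image" in clip_loader_types(object_info)


def fetch_clip_loader_info(base_url: str = "http://127.0.0.1:8188") -> dict:
    """Ask a running ComfyUI server for the CLIPLoader node definition."""
    with urllib.request.urlopen(f"{base_url}/object_info/CLIPLoader") as resp:
        return json.load(resp)


# Usage (needs ComfyUI running):
#   info = fetch_clip_loader_info()
#   print("qwen_image supported:", supports_qwen_image(info))
```

If it prints False, you’re seeing the same situation as the “not in list” error, and updating ComfyUI is the fix.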

Some users also reported broken output with the --use-sage-attention or --fast launch flags turned on. Disable them and it usually clears up.

And yeah, if you’re only getting partial results or strange glitchy shapes in parts of the render, that’s likely a resolution or CFG mismatch. People seem to get the best results with something like:

Or you can go the safer route with CFG=1 and more steps. Depends on your GPU and how patient you are.

I made a quick video tutorial showing the Qwen-Image workflow inside ComfyUI.

How to Set Up Qwen-Image in ComfyUI (The Right Way)

If you want to try Qwen-Image locally inside ComfyUI, the setup’s pretty straightforward — as long as you’re on the latest Dev build. You don’t need any custom scripts or hidden configs. Just drop the files in the right spots and it’ll run like any other model.

Which Qwen Model to Use?

There are two main versions available:

You can also grab GGUF versions on Hugging Face. They come in quantization levels from Q2 to Q8, and the rule is simple: the lower the Q, the less VRAM you need.
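To make “the lower the Q, the less VRAM” concrete, here’s a rough sizing sketch. It assumes about 20 billion parameters and uses the nominal bits-per-weight of llama.cpp’s base quant types; real GGUF files keep some layers at higher precision, so actual sizes will differ. ComfyUI can also offload to system RAM, so a quant that doesn’t “fit” here isn’t necessarily unusable, just slower. The 4 GB headroom default is a hypothetical allowance, not a measured figure.

```python
# Rough GGUF sizing for a ~20B-parameter model (an assumption based on the
# "20B" figure). Bits-per-weight values are the nominal llama.cpp figures for
# each base quant type; real files mix types per layer.
BITS_PER_WEIGHT = {
    "Q2_K": 2.5625,
    "Q3_K": 3.4375,
    "Q4_K": 4.5,
    "Q5_K": 5.5,
    "Q6_K": 6.5625,
    "Q8_0": 8.5,
}


def gguf_weight_gb(quant: str, params: float = 20e9) -> float:
    """Approximate weight size in GB (1 GB = 10**9 bytes)."""
    return params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def largest_quant_that_fits(vram_gb: float, headroom_gb: float = 4.0,
                            params: float = 20e9):
    """Return the highest-precision quant whose weights fit in
    vram_gb minus headroom_gb, or None if even the smallest doesn't.

    headroom_gb is a hypothetical allowance for activations and the other
    models in the workflow; tune it for your setup.
    """
    budget = vram_gb - headroom_gb
    best = None
    # Walk from smallest to largest and keep the last one that still fits.
    for quant in sorted(BITS_PER_WEIGHT, key=BITS_PER_WEIGHT.get):
        if gguf_weight_gb(quant, params) <= budget:
            best = quant
    return best


if __name__ == "__main__":
    for vram in (12, 16, 24):
        print(f"{vram} GB card -> {largest_quant_that_fits(vram)}")
```

Under these assumptions a 16GB card lands on Q4_K (about 11.25 GB of weights) and a 24GB card on Q6_K, while Q8_0 comes out around 21.25 GB.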

Both models are available on Hugging Face. Just pick the one that matches your GPU setup.

Once downloaded, save the file into:

ComfyUI/models/diffusion_models/

Text Encoder for Qwen

This model uses a custom text encoder built for multilingual text generation:

You only need this one. It handles English, Chinese, Japanese, Korean, Italian, and more.

Drop it here:

ComfyUI/models/text_encoders/

VAE for Qwen-Image

You’ll also need the matching VAE file:

Put this one into:

ComfyUI/models/vae/

That’s it for model setup.
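If a loader node shows an empty dropdown, it’s worth confirming the files actually landed in those three folders. This is a small sketch that only checks that each folder contains at least one .safetensors or .gguf file; it doesn’t verify that it’s the right Qwen-Image file, and the ComfyUI root path is something you pass in. GGUF loader nodes may also read from other folders (such as models/unet), which this check ignores.

```python
from pathlib import Path

# The three folders from the setup steps above, relative to the ComfyUI root.
EXPECTED_DIRS = {
    "diffusion model": "models/diffusion_models",
    "text encoder": "models/text_encoders",
    "VAE": "models/vae",
}
MODEL_SUFFIXES = {".safetensors", ".gguf"}


def missing_components(comfy_root) -> list:
    """List which components have no model file in their expected folder.

    Only checks that *some* .safetensors/.gguf file exists; it does not
    verify that it is the right Qwen-Image file.
    """
    root = Path(comfy_root)
    missing = []
    for label, rel_dir in EXPECTED_DIRS.items():
        folder = root / rel_dir
        has_model = folder.is_dir() and any(
            p.is_file() and p.suffix in MODEL_SUFFIXES for p in folder.iterdir()
        )
        if not has_model:
            missing.append(label)
    return missing


if __name__ == "__main__":
    import sys

    # Hypothetical default: a ComfyUI folder in the current directory.
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    gaps = missing_components(root)
    print("All three folders have a model file." if not gaps
          else "Nothing found for: " + ", ".join(gaps))
```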

In your workflow, just load the files into the usual nodes:

Set your resolution in the EmptySD3LatentImage node, write your prompt into the CLIP Text Encode node, then hit Queue.

No extra configs needed — it just works if you’re up to date.
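The same graph can also be queued from a script through ComfyUI’s HTTP API, which accepts an API-format workflow (a dict mapping node IDs to a class_type and its inputs) posted to /prompt. This is a minimal sketch, not the official Qwen-Image template: the file names are placeholders you pass in, the 1024×1024 resolution, euler sampler, and simple scheduler are assumptions, and the official template may include extra nodes this leaves out. The most reliable reference is your own working graph exported in API format from the ComfyUI menu.

```python
import json
import urllib.request


def build_qwen_workflow(unet_name, clip_name, vae_name, prompt, negative="",
                        width=1024, height=1024, steps=20, cfg=1.0, seed=0):
    """Minimal API-format graph: loaders -> text encode -> empty latent ->
    KSampler -> VAE decode -> save. A ["node_id", output_index] pair wires
    one node's output into another node's input."""
    return {
        "1": {"class_type": "UNETLoader",
              "inputs": {"unet_name": unet_name, "weight_dtype": "default"}},
        "2": {"class_type": "CLIPLoader",
              "inputs": {"clip_name": clip_name, "type": "qwen_image"}},
        "3": {"class_type": "VAELoader", "inputs": {"vae_name": vae_name}},
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["2", 0]}},
        "5": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["2", 0]}},
        "6": {"class_type": "EmptySD3LatentImage",
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        # cfg=1.0 is the "safer route" above; consider more steps at CFG 1.
        "7": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["4", 0],
                         "negative": ["5", 0], "latent_image": ["6", 0],
                         "seed": seed, "steps": steps, "cfg": cfg,
                         "sampler_name": "euler", "scheduler": "simple",
                         "denoise": 1.0}},
        "8": {"class_type": "VAEDecode",
              "inputs": {"samples": ["7", 0], "vae": ["3", 0]}},
        "9": {"class_type": "SaveImage",
              "inputs": {"images": ["8", 0], "filename_prefix": "qwen_image"}},
    }


def queue_prompt(workflow, base_url="http://127.0.0.1:8188"):
    """POST the graph to ComfyUI's /prompt endpoint and return the JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/prompt", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Usage (needs ComfyUI running; file names below are placeholders):
#   wf = build_qwen_workflow("MODEL.safetensors", "TEXT_ENCODER.safetensors",
#                            "VAE.safetensors", "Large title: START FRESH")
#   print(queue_prompt(wf))
```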

Quick Fix for Black Images in Qwen-Image (ComfyUI)

If you’re getting just a black screen after generating, here’s what usually causes it — and how to fix it fast:

1. Make sure you’re using the latest ComfyUI (Dev/Nightly version)

The Stable or Desktop versions don’t support Qwen-Image yet.

2. Check your CLIP/Text Encoder file

Qwen-Image only works with this exact file:

Fix: Make sure this file is in ComfyUI/models/text_encoders/ and that you’re loading it correctly in the Load CLIP node.

3. Disable --use-sage-attention or --fast if you’re using them

Some launch options break Qwen-Image.

If you’ve done all that and it’s still black, it’s almost always a mismatch between the model and CLIP encoder or an outdated ComfyUI version.
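If you launch ComfyUI from your own script or shortcut, one way to keep those two flags out is to filter the argument list before starting it. This sketch assumes --fast may be followed by optional feature names (recent ComfyUI versions appear to accept them), so bare values right after it are dropped too; --use-sage-attention takes no value.

```python
# Flags that users reported causing black or broken Qwen-Image output.
BREAKING_FLAGS = {"--use-sage-attention", "--fast"}


def sanitize_launch_args(args):
    """Return a copy of a ComfyUI argument list without the flags above.

    Assumes --fast may be followed by optional feature names, so bare values
    right after it are dropped too. --use-sage-attention takes no value.
    """
    cleaned = []
    dropping_fast_values = False
    for arg in args:
        if dropping_fast_values and not arg.startswith("-"):
            continue  # a value that belongs to --fast
        dropping_fast_values = False
        if arg in BREAKING_FLAGS:
            dropping_fast_values = arg == "--fast"
            continue
        cleaned.append(arg)
    return cleaned
```

For example, passing ["--listen", "--fast", "--port", "8188"] returns ["--listen", "--port", "8188"]; other flags and their values pass through untouched.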

Real Examples — Layouts, Fonts, and More

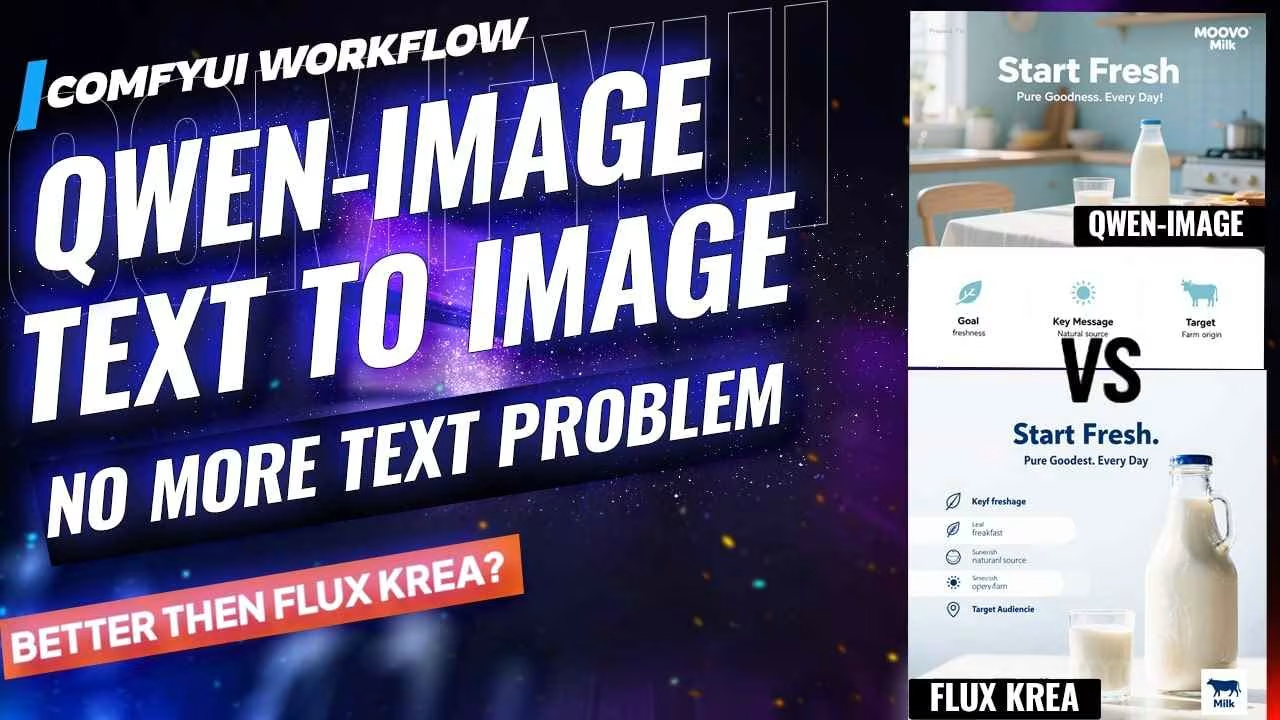

Let’s test this model with a real example.

Since Qwen-Image is strongest at text rendering, I wanted to try something layout-heavy — like a full marketing banner.

Here’s the prompt I used:

Layout with creamy white and soft shy blue tone.

Large title: START FRESH

Tagline: Pure Goodness. Every Day.

Three simple sections: Goal, Key Message, Target Audience.

Light icons: leaf (freshness), sun (natural source), cow (farm origin).

Logo: MOOVO Milk — simple and stylish in the corner.

I hit Generate — and honestly, the result shocked me.

The background tone came out perfectly — soft cream and blue. The title showed up exactly where I wanted, and the tagline sat just below it, clean and professional.

But here’s the part that really impressed me:

It made the three content sections — Goal, Key Message, Target Audience — just like I described. No text overlapping. No alignment issues. It even added the icons: leaf, sun, and cow — all placed cleanly.

It looked like a real ad. No broken letters. No weird spacing.

Then I tried the same prompt in Flux Krea (FP16 version).

The layout looked decent at first glance — but the spelling was off. About 70% of the text had errors or garbled characters. The overall structure felt close, but it just didn’t land.

Qwen-Image clearly did better.

Next, I tested the Qwen-Image Q8 GGUF version with the same banner prompt.

The result? Different style, but still clean. Around 95% of the text was correct.

A couple of issues, though: the word “Audience” and the phrase “Farm Origin” were slightly misplaced, and “Target” was missing entirely. Still, much better than I expected from a Q8 quant.
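If you want to put a number on text accuracy for your own comparisons, one rough approach is word-level recall: the share of the words you asked for that actually show up. This sketch assumes you already have the rendered text as a string (typed up by hand or from an OCR tool of your choice; it does no OCR itself), and it ignores placement, so a misplaced “Audience” still counts as correct while a missing “Target” does not.

```python
import re
from collections import Counter


def words(text):
    """Lowercase word tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_recall(expected, observed):
    """Share of expected words (counting repeats) that also appear in the
    observed text. Word order and placement are NOT checked."""
    want = Counter(words(expected))
    got = Counter(words(observed))
    total = sum(want.values())
    if total == 0:
        return 1.0
    matched = sum(min(count, got[word]) for word, count in want.items())
    return matched / total
```

For instance, word_recall("Goal Key Message Target Audience", "Goal Key Message Audience") gives 0.8, since one of the five requested words is missing.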

Let’s make this test harder.

I gave it a different kind of prompt this time — something poetic:

A man is standing in front of a window.

He is holding a yellow paper.

On the paper is a handwritten poem:

‘A lantern moon climbs through the silver night,

Unfurling quiet dreams across the sky,

Each star a whispered promise wrapped in light,

That dawn will bloom, through darkness wanders by.’

Also, there is a cool cat sitting at the door.

When I ran this, I didn’t expect much. But it actually rendered every line of the poem — clearly, and in the right place. The handwriting looked clean. You could read each word.

Two small issues: the word “whispered” was slightly hard to read. And “dreams” looked a little blurry. But honestly? Still readable. I tried increasing the steps — no change. Higher resolution — same thing. So yeah, 20 steps worked best here too.

Then I tested that same prompt with Flux Krea. Didn’t come close. The text didn’t show up right at all. So again, Qwen wins — even on complex text with style and emotion.

Realism Tests

I gave it a simple prompt:

Generate an image of a girl sitting on a stairwell.

And it shocked me. The result didn’t look AI-generated at all. No plastic skin, no weird lighting. It actually looked like a real photo. Natural shadows, good color — even the background felt right.

Just to be fair, I tried the same prompt in Flux Krea. And it surprised me again — it also nailed the realism. The output looked like a movie still. Very human, very clean. Honestly, I’d call this one a tie. Both did great.

One more test: hand generation.

This is where a lot of models mess up.

So I gave it a simple portrait prompt — a woman showing five fingers.

Qwen-Image got it right. Five fingers. Looked natural.

Flux Krea? First try — six fingers. I gave it another shot, and yeah, second time it fixed itself. But still, Qwen got it perfect on the first run.
