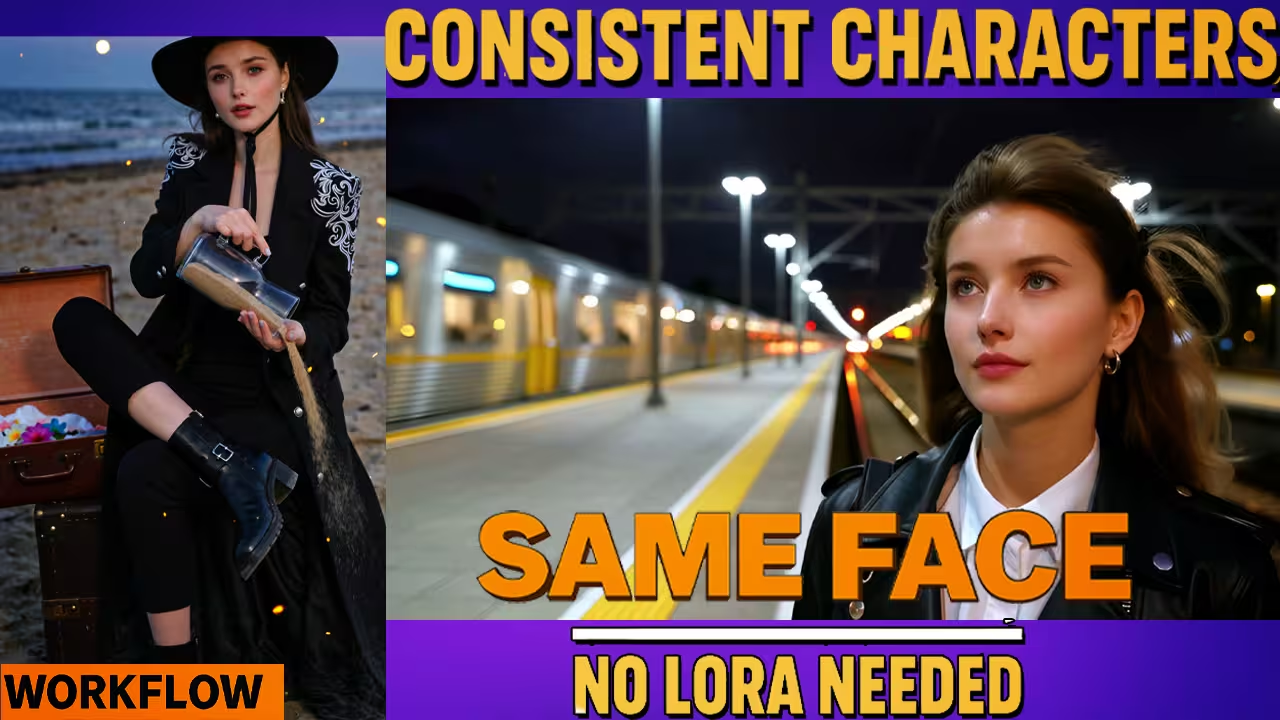

I wanted one thing: a face that stays the same in every frame while the person moves the way they would in a real camera shot. I tried the new Lynx workflow inside ComfyUI and it did exactly that. No training needed. No custom LoRA for the character. Just a clean workflow and a few settings.

Lynx is made to keep identity stable from one image across a full video. If you are new to it, Lynx comes from ByteDance and runs on top of the Wan video model family. It adds lightweight ID adapters and a ref adapter that carry facial features and reference detail across frames. That is why the face does not melt or drift while the body moves and the light shifts.

Below is exactly how I set it up in ComfyUI and what values gave me the most natural results.

Step 1. Install or update the video nodes you need

First I updated ComfyUI to the latest build.

Now for Lynx. There are two common ways people add it.

- You can use wrapper nodes that expose Lynx embeds in ComfyUI. Kijai’s WanVideoWrapper is the popular one and it has active threads about Lynx support. If you already use this wrapper, update it from GitHub.

- Some builds expose a node called WanVideoAddLynxEmbeds. If you see this in your node list after updating, you are good. It plugs into Wan video and adds the Lynx identity and reference signals.

Step 2. Files you need to download

To make the Lynx workflow run properly in ComfyUI, you need these model files. This is how I save them on my system.

- ComfyUI/
  - models/
    - diffusion_models/
      - Wan2_1-T2V-14B_fp8_e4m3fn_scaled_KJ.safetensors
      - Lynx/
        - Wan2_1-T2V-14B-Lynx_full_ip_layers_fp16.safetensors
        - Wan2_1-T2V-14B-Lynx_full_ref_layers_fp16.safetensors
        - lynx_full_resampler_fp32.safetensors
    - text_encoders/
      - umt5-xxl-enc-bf16.safetensors or umt5-xxl-enc-fp8_e4m3fn.safetensors
    - lora/
      - Wan2.2-Fun-A14B-InP-high-noise-HPS2.1.safetensors

Notes I follow

- Pick one text encoder build. Use BF16 if you have the VRAM. Use FP8 if you need a lighter run.

- Keep Lynx files together. The IP layers keep identity. The Ref layers keep texture. The resampler helps stability.

- After saving, restart ComfyUI or click Refresh Models so nodes can see the files.
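Before restarting, a quick script can confirm that nothing is missing. This is a minimal sketch, not part of the workflow itself: it looks for each file name from the list above anywhere under the models folder, so it does not depend on the exact subfolder you picked. The `find_missing` helper and the `ComfyUI/models` path are my own assumptions; adjust the path to your install.

```python
from pathlib import Path

# File names taken from the list above.
REQUIRED_FILES = [
    "Wan2_1-T2V-14B_fp8_e4m3fn_scaled_KJ.safetensors",
    "Wan2_1-T2V-14B-Lynx_full_ip_layers_fp16.safetensors",
    "Wan2_1-T2V-14B-Lynx_full_ref_layers_fp16.safetensors",
    "lynx_full_resampler_fp32.safetensors",
    "Wan2.2-Fun-A14B-InP-high-noise-HPS2.1.safetensors",
]

# Only one of the two text encoder builds is needed.
TEXT_ENCODERS = [
    "umt5-xxl-enc-bf16.safetensors",
    "umt5-xxl-enc-fp8_e4m3fn.safetensors",
]


def find_missing(models_dir):
    """Return the required file names not found anywhere under models_dir."""
    root = Path(models_dir)
    # Collect every .safetensors file name, whatever subfolder it sits in.
    present = {p.name for p in root.rglob("*.safetensors")} if root.is_dir() else set()
    missing = [name for name in REQUIRED_FILES if name not in present]
    if not any(name in present for name in TEXT_ENCODERS):
        missing.append(" or ".join(TEXT_ENCODERS))
    return missing


if __name__ == "__main__":
    # Hypothetical path: point this at your own ComfyUI install.
    for name in find_missing("ComfyUI/models"):
        print("missing:", name)
```

If the script prints nothing, every file is in place.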

Download Links

Wan 2.1 base model FP8 scaled

https://huggingface.co/Kijai/WanVideo_comfy_fp8_scaled/blob/main/T2V/Wan2_1-T2V-14B_fp8_e4m3fn_scaled_KJ.safetensors

HPS LoRA (skin and color finish)

https://huggingface.co/alibaba-pai/Wan2.2-Fun-Reward-LoRAs/blob/main/Wan2.2-Fun-A14B-InP-high-noise-HPS2.1.safetensors

Lynx components (IP, Ref, Resampler) folder

https://huggingface.co/Kijai/WanVideo_comfy/tree/main/Lynx

Text encoder BF16

https://huggingface.co/Kijai/WanVideo_comfy/blob/main/umt5-xxl-enc-bf16.safetensors

Text encoder FP8

https://huggingface.co/Kijai/WanVideo_comfy/blob/main/umt5-xxl-enc-fp8_e4m3fn.safetensors
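The single-file links above open the Hugging Face file page, not the file itself. If you prefer to download from a script or a download manager, swapping `/blob/` for `/resolve/` in the URL gives the direct file link. Here is a small sketch of that conversion. The `to_direct_url` helper is my own name, and it only handles single-file links, so the Lynx folder link is left out.

```python
def to_direct_url(page_url: str) -> str:
    """Turn a Hugging Face file page URL (/blob/) into a direct download URL (/resolve/)."""
    if "/blob/" not in page_url:
        raise ValueError(f"not a file page URL: {page_url}")
    return page_url.replace("/blob/", "/resolve/", 1)


# The single-file links from the list above.
FILE_PAGES = [
    "https://huggingface.co/Kijai/WanVideo_comfy_fp8_scaled/blob/main/T2V/Wan2_1-T2V-14B_fp8_e4m3fn_scaled_KJ.safetensors",
    "https://huggingface.co/alibaba-pai/Wan2.2-Fun-Reward-LoRAs/blob/main/Wan2.2-Fun-A14B-InP-high-noise-HPS2.1.safetensors",
    "https://huggingface.co/Kijai/WanVideo_comfy/blob/main/umt5-xxl-enc-bf16.safetensors",
    "https://huggingface.co/Kijai/WanVideo_comfy/blob/main/umt5-xxl-enc-fp8_e4m3fn.safetensors",
]

for url in FILE_PAGES:
    print(to_direct_url(url))
```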

I use two LoRAs: LightX2V and HPS

I keep two LoRAs in this setup. LightX2V is for speed. HPS is for color and tone. They do different jobs, and that is why the results feel clean even when the face stays locked by Lynx.

Step 3. The two values that control identity and detail

In the Lynx embed node I get two sliders.

- IP Scale controls how strong the identity lock is. If I set it too high the face looks stiff. If I set it too low the face drifts. My safe range is 0.6 to 0.7. This keeps the same person and still allows natural expressions.

- Ref Scale controls how much texture to borrow from the reference image. At 0.6 the model keeps the core look and still adapts to lighting. At 0.8 to 0.9 the face looks very close to the source photo. If I need to preserve a small tattoo or a mole I push it higher for that shot.

These two sliders do most of the work. Lynx was designed with ID adapters and a ref adapter for this reason.
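When I dial in a new face, it helps to try a few combinations of the two values side by side instead of guessing. The sketch below only builds the list of combinations to test from the ranges mentioned above. It does not talk to ComfyUI, and the `ip_scale` and `ref_scale` key names are my own labels, not confirmed node input names.

```python
from itertools import product

IP_SCALES = [0.6, 0.65, 0.7]   # the safe range for identity
REF_SCALES = [0.6, 0.8, 0.9]   # from "adapts to lighting" to "very close to the photo"


def sweep(ip_scales, ref_scales):
    """Yield one settings dict per IP Scale / Ref Scale combination."""
    for ip, ref in product(ip_scales, ref_scales):
        yield {
            "ip_scale": ip,    # hypothetical key name
            "ref_scale": ref,  # hypothetical key name
            "label": f"ip{ip:.2f}_ref{ref:.2f}",  # handy as an output file prefix
        }


for run in sweep(IP_SCALES, REF_SCALES):
    print(run["label"])
```

Nine short renders with the same seed and prompt are usually enough to see where the face turns stiff and where it starts to drift.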

Step 4. Test scenes

Cozy cafe

Prompt

A person sits next to a large window in a cozy cafe. City lights blur through the rain. They glance at their phone and laugh.

Settings

IP Scale 0.6. Ref Scale 0.7. Steps 6. Normal CFG.

Result

The same face in every frame. The laugh looks natural. The window reflection does not flicker. The skin tone stays stable when the head turns a little.

Rainy platform at night

Prompt

Close up on a rainy platform. The person faces the camera. They close their eyes and feel the wind. A train passes behind with neon reflections on the ground.

Settings

IP Scale 0.65. Ref Scale 0.6. Steps 6.

Result

The hair moves a bit with the wind. Eyes close naturally. The jawline stays the same as the reference image. The neon flickers but does not break the face.

Preserve a small feature

I used a source image with a small tattoo near the eye.

With Ref Scale at 1.0 the tattoo stays in every frame.

With Ref Scale at 0.8 the face stays the same but the hair tone adapts to the scene light. I use 0.8 when I want a cinematic look with small color shifts.
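To make these tests easy to repeat, the settings can be kept as a small preset table. This is only a sketch of how I would write them down. The `ScenePreset` class and its field names are hypothetical, and a value stays `None` wherever I did not list one for that test above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ScenePreset:
    name: str
    ref_scale: float
    ip_scale: Optional[float] = None  # None where no value is listed above
    steps: Optional[int] = None       # None where no value is listed above


PRESETS = [
    ScenePreset("cozy_cafe", ref_scale=0.7, ip_scale=0.6, steps=6),
    ScenePreset("rainy_platform", ref_scale=0.6, ip_scale=0.65, steps=6),
    ScenePreset("keep_tattoo", ref_scale=1.0),
    ScenePreset("cinematic_tone", ref_scale=0.8),
]


def describe(preset: ScenePreset) -> str:
    """Format a preset as one line, skipping values that were not listed."""
    parts = []
    if preset.ip_scale is not None:
        parts.append(f"ip_scale={preset.ip_scale}")
    parts.append(f"ref_scale={preset.ref_scale}")
    if preset.steps is not None:
        parts.append(f"steps={preset.steps}")
    return f"{preset.name}: " + ", ".join(parts)


for preset in PRESETS:
    print(describe(preset))
```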

Why Lynx preserves faces so well

Lynx was built for personalized video from a single image. The model sits on top of a diffusion transformer base and uses lightweight adapters for identity and for spatial detail. In plain words, it keeps who the person is and it carries local details like eyebrows and skin texture across time. That is why the person feels the same even when the camera moves.