If you’ve been waiting for the next big leap in open-source AI video generation, today’s your lucky day.

Because WAN Phantom isn’t just another incremental update—it’s a complete game-changer for character consistency and virtual try-on features.

I’ve tested dozens of AI video models over the years. Most struggle with simple things like keeping a character’s face stable across frames or handling clothing changes realistically.

But after putting Phantom through its paces, I can confidently say it solves problems that have plagued other models for years.

Here’s what makes it different:

- Unmatched character consistency – Faces stay recognizable even during complex movements

- True virtual try-on capability – Swap outfits on characters while preserving all other details

- Blazing fast generations – Thanks to optimizations like the CausVid LoRA V2

I’ll walk you through real-world examples showing exactly how it works, then break down the installation process step-by-step.

Putting Character Consistency to the Test

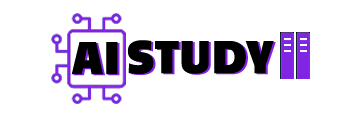

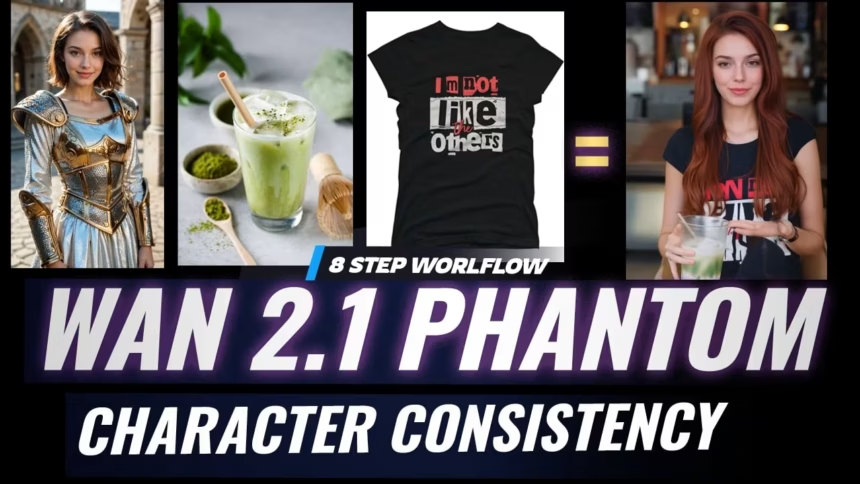

My first experiment was simple but telling—could Phantom maintain a character’s identity while changing outfits?

I started with an image of “WOMEN” (our test character) and gave her a new graphic t-shirt to wear—one with bold, distressed red-and-white text reading “I’m not like the others.”

Here’s how I structured the prompt:

- Character details: “She is wearing black graphic t-shirt that says I’m not like the others in bold”

- Primary action: “Taking a selfie video”

- Environment: “Concert stadium with colorful flashing lights and energetic vibe”

- Emotional context: “Half-smiling, hands relaxed by her sides”
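If you plan to reuse this four-part structure across many test scenes, a tiny helper keeps the pieces consistent. This is a minimal sketch, not part of Phantom or ComfyUI: the function name `build_prompt` and the choice to join the parts as short sentences are my own assumptions. Phantom only ever sees the final string, which you would paste into the positive prompt.

```python
def build_prompt(character: str, action: str, environment: str, emotion: str) -> str:
    """Join the four prompt components (character, action, environment,
    emotional context) into a single prompt string.

    Hypothetical helper for illustration only; the order mirrors the breakdown above.
    """
    parts = [character, action, environment, emotion]
    # Drop empty components, trim whitespace, and remove trailing periods
    # so that joining with ". " never produces doubled punctuation.
    cleaned = [p.strip().rstrip(".") for p in parts if p and p.strip()]
    return ". ".join(cleaned) + "."


prompt = build_prompt(
    character="She is wearing black graphic t-shirt that says I'm not like the others in bold",
    action="Taking a selfie video",
    environment="Concert stadium with colorful flashing lights and energetic vibe",
    emotion="Half-smiling, hands relaxed by her sides",
)
print(prompt)
```

Keeping each component separate makes it easy to swap only the outfit or only the environment between runs while holding everything else fixed, which is exactly what a consistency test needs.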

The results blew me away.

Not only did Phantom perfectly maintain WOMEN’s facial features across all frames, but it handled the new clothing seamlessly. The background details matched exactly what I’d described—flashing lights, concert energy—without any of the weird artifacts or distortions I’ve come to expect from other models.

Even with interpolation turned on (which often breaks consistency), the character remained stable and recognizable.

The Speed Advantage: CausVid LoRA V2

What makes Phantom particularly impressive is how efficiently it runs.

The model fully supports the CausVid LoRA (now at version 2), which dramatically cuts generation time. Where other models might require 20-50 steps for decent results, I was getting high-quality videos in just 6-10 steps with a single CFG setting.
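To put those step counts in perspective, here is a back-of-the-envelope calculation. It assumes, as a simplification, that every sampling step costs about the same time, so the speedup is just the ratio of step counts; real timings also depend on resolution, frame count, and whether the CFG value forces an extra unconditional pass per step. The step ranges come from the paragraph above; the function name is illustrative.

```python
def step_speedup(baseline_steps: int, fast_steps: int) -> float:
    """Speedup from using fewer sampling steps, assuming a constant cost per step."""
    if baseline_steps <= 0 or fast_steps <= 0:
        raise ValueError("step counts must be positive")
    return baseline_steps / fast_steps


# Most conservative case: 20 baseline steps vs. 10 steps with the LoRA.
low = step_speedup(20, 10)
# Most optimistic case: 50 baseline steps vs. 6 steps with the LoRA.
high = step_speedup(50, 6)
print(f"Estimated speedup: {low:.1f}x to {high:.1f}x")
```

Under that assumption, the step reduction alone works out to roughly a 2x to 8x saving, which is why the LoRA matters so much on consumer GPUs.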

For reference, that same concert scene generated in about 2-3 minutes on my RTX 4070. If you’re working with lower VRAM cards, you can drop the resolution to 720×480—though there is a slight quality tradeoff.

Pushing Limits With Multiple Image Inputs

For the real stress test, I fed Phantom three separate images simultaneously:

- Our WOMEN character

- A drink in a clear cup

- A black typography shirt

Using the Image Batch Multi custom node routed into Phantom’s Subject-to-Video node, the model attempted to incorporate all three elements into a single coherent video.

The results were mostly impressive:

- The shirt and drink both appeared as intended

- Character consistency held strong

- Generation time remained under 3 minutes

That said, I did notice one physics oddity—WOMEN appeared to grip the drink by the straw rather than the cup. It’s a reminder that while Phantom is groundbreaking, it’s not perfect. Complex multi-object interactions still need refinement.

Installation Guide

To run Phantom yourself, you’ll need:

- The core model files:

- VAE component:

- Text encoder (choose one):

- Optional speed boost:

For hardware recommendations:

- High-end (16GB+ VRAM): Use Phantom Q8 with the UMT5 Q8 text encoder

- Mid-range (8GB VRAM): Opt for the UMT5 Q6 text encoder and a Phantom GGUF version
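The two hardware tiers above can be expressed as a simple lookup. This is a sketch under assumptions: the guide only names a 16GB+ tier and an 8GB tier, so treating everything from 8GB up to 16GB as mid-range, and returning `None` below 8GB, are my own choices. The values are descriptive labels taken from the list, not actual file names.

```python
from typing import Optional


def recommend_setup(vram_gb: float) -> Optional[dict]:
    """Map available VRAM to the model/text-encoder pairing suggested in the guide.

    Assumption: cards between 8 and 16 GB use the mid-range tier; below 8 GB
    the guide gives no recommendation, so None is returned.
    """
    if vram_gb >= 16:
        return {"tier": "high-end", "phantom": "Phantom Q8", "text_encoder": "UMT5 Q8"}
    if vram_gb >= 8:
        return {"tier": "mid-range", "phantom": "Phantom GGUF", "text_encoder": "Q6"}
    return None


for vram in (24, 12, 8, 6):
    print(vram, recommend_setup(vram))
```

If you land in the mid-range tier, combining it with the lower 720×480 resolution mentioned earlier is the obvious next lever when you still run out of memory.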

Don’t forget to install the CausVid LoRA V2; it makes a noticeable difference in generation speed.

Final Thoughts

After extensive testing, WAN Phantom stands out as the most capable open-source model for:

- Character-consistent video generation

- Virtual clothing try-ons

- Product placement scenarios

While it still has some limitations with complex physics and multi-object interactions, its strengths far outweigh these minor issues. For anyone working in AI video—whether for content creation, prototyping, or research—Phantom represents a significant leap forward.

The speed optimizations (especially with the CausVid LoRA V2) make it practical for real-world use, not just experimentation. And the consistency improvements finally deliver on promises other models have been making for years.

If you’re ready to try it yourself, grab the files from the links above and start experimenting. Just be prepared—once you see what Phantom can do, you might have trouble going back to other video AI models.